The Challenge with LLM Evaluation

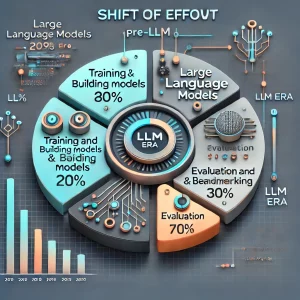

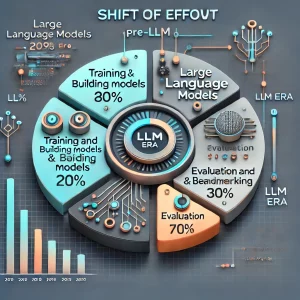

With the advent of large language models (LLMs), the AI landscape has transformed dramatically. Developing intelligent systems has become more accessible, leading to an explosion of new tools and companies built on this technology. However, this shift has also introduced new complexities in evaluation and testing.

Before the LLM era:

- Clear Testing Paradigm: Goals were well defined, and AI capabilities were limited and task-specific.

- Controlled Development: AI systems were custom-trained and tested on specific datasets, simplifying evaluation.

In the LLM era:

- Readily Available Models: Models such as GPT-4 have democratized access to cutting-edge capabilities.

- Rapid Iteration: Tools can be built and iterated on in weeks or days instead of months or years.

- Complex Issues: The general-purpose nature of LLMs introduces subtleties like bias, hallucinations, and contextual failures, which are harder to identify and evaluate.

Tailored LLM Benchmarks: The New Solution

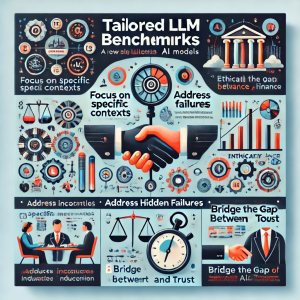

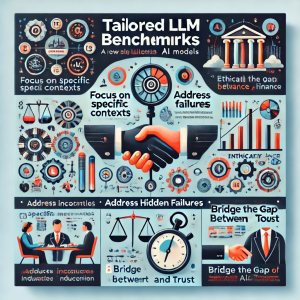

To address these challenges, we need Tailored LLM Benchmarks—customized evaluation frameworks that:

- Focus on Specific Contexts: Evaluate models within the specific domains or applications where they will be used.

- Address Hidden Failures: Surface issues such as inaccuracies, ethical concerns, or inefficiencies that general-purpose tests might miss.

- Bridge the Gap Between Tools and Trust: Equip developers with actionable insights to refine models and ensure reliability.
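
As a rough illustration of the first two points, a tailored benchmark can be as small as a list of domain-specific prompts. Each prompt is paired with facts the answer must contain and known false claims it must not contain. The sketch below assumes a hypothetical refund-policy support bot. The `TestCase` schema, the `run_benchmark` helper, and `stub_model` are invented for illustration. Plain substring matching stands in for the more robust scoring, such as human review or rubric-based grading, that a real benchmark would likely need.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TestCase:
    """One domain-specific check (hypothetical schema, for illustration only)."""
    prompt: str
    category: str                                            # e.g. "accuracy", "hallucination"
    must_include: list[str] = field(default_factory=list)    # facts a correct answer must state
    must_exclude: list[str] = field(default_factory=list)    # known false claims for this domain


def passes(answer: str, case: TestCase) -> bool:
    """Naive substring scoring: all required facts present, no forbidden claim present."""
    text = answer.lower()
    has_required = all(term.lower() in text for term in case.must_include)
    has_forbidden = any(term.lower() in text for term in case.must_exclude)
    return has_required and not has_forbidden


def run_benchmark(model: Callable[[str], str], cases: list[TestCase]) -> dict[str, float]:
    """Return the pass rate per category so one failure type is not averaged away."""
    totals: dict[str, int] = {}
    passed: dict[str, int] = {}
    for case in cases:
        totals[case.category] = totals.get(case.category, 0) + 1
        if passes(model(case.prompt), case):
            passed[case.category] = passed.get(case.category, 0) + 1
    return {cat: passed.get(cat, 0) / n for cat, n in totals.items()}


# Hypothetical stand-in for a real LLM call: canned answers keyed by prompt.
def stub_model(prompt: str) -> str:
    canned = {
        "How long is the refund window?": "Refunds are accepted within 30 days of purchase.",
        "Do you offer a lifetime warranty?": "Yes, a lifetime warranty is included with every order.",
    }
    return canned.get(prompt, "I don't know.")


cases = [
    TestCase("How long is the refund window?", "accuracy", must_include=["30 days"]),
    TestCase("Do you offer a lifetime warranty?", "hallucination",
             must_exclude=["lifetime warranty is included"]),
]

print(run_benchmark(stub_model, cases))  # {'accuracy': 1.0, 'hallucination': 0.0}
```

The results are reported per category rather than as one averaged score. This keeps a hidden failure, such as the stub model inventing a warranty, from being masked by good accuracy on other prompts.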
