The Challenge with LLM Evaluation

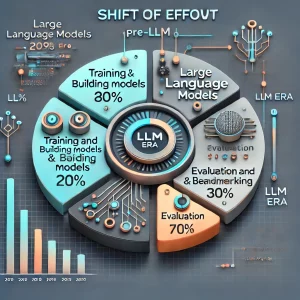

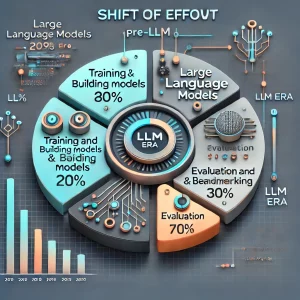

The introduction of large language models (LLMs) transformed the AI landscape. Building intelligent tools became easier, which led to a surge of new products and companies built on this technology. Before the LLM era, implementing AI was harder, but testing it was relatively straightforward because goals were clearly defined and capabilities were limited. Readily available LLMs brought unprecedented power and enabled rapid iteration, yet they also introduced complex, hidden issues that are harder to detect. Tailored LLM Benchmarks are our answer to these challenges.

Bespoke LLM Benchmark for Your Application

We do the hard work: we build a deep understanding of your unique needs and challenges, then apply our expertise to deliver relevant and reliable benchmarks.

We bring to the table:

- Deep NLP and linguistic expertise

- Data engineering excellence

- Deep understanding of language models

Use cases:

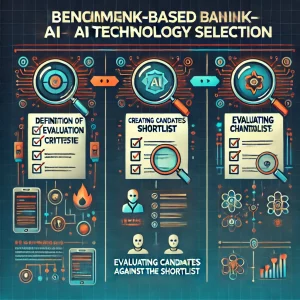

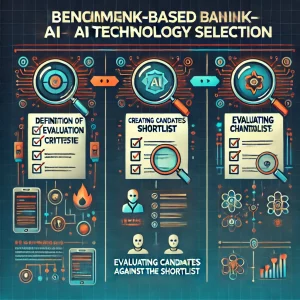

Benchmark-Based AI Technology Selection

Selecting the AI that is best for you is far from trivial. The most reliable way to do it is to define and run a benchmark tailored to your needs. That is exactly what we do.

Our methodology consists of:

- Defining the evaluation criteria

- Creating a shortlist of candidates

- Evaluating the shortlisted candidates against the criteria
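
As a rough illustration of the three steps above, the sketch below ranks shortlisted candidates by a weighted average of per-criterion scores. The criterion names, weights, candidate names, and scores are all hypothetical placeholders; in a real project the scores would come from running the benchmark, and the weighting scheme would be agreed with the client.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float  # relative importance (hypothetical values below)


def rank_candidates(criteria, scores):
    """Return (candidate, weighted score) pairs, best first.

    `scores` maps candidate name -> {criterion name -> score in [0, 1]}.
    """
    total_weight = sum(c.weight for c in criteria)
    ranked = []
    for candidate, per_criterion in scores.items():
        weighted = sum(c.weight * per_criterion[c.name] for c in criteria)
        ranked.append((candidate, weighted / total_weight))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Step 1: evaluation criteria (illustrative only).
criteria = [
    Criterion("answer_accuracy", 0.5),
    Criterion("latency", 0.2),
    Criterion("cost", 0.3),
]

# Step 2: shortlist, with made-up scores standing in for benchmark results.
scores = {
    "model_a": {"answer_accuracy": 0.9, "latency": 0.5, "cost": 0.4},
    "model_b": {"answer_accuracy": 0.7, "latency": 0.9, "cost": 0.9},
}

# Step 3: evaluate the shortlist against the criteria.
ranking = rank_candidates(criteria, scores)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

A weighted average is only one possible aggregation. Hard requirements, such as a minimum accuracy, are often better handled as pass/fail filters applied before ranking.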

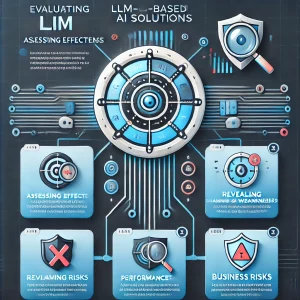

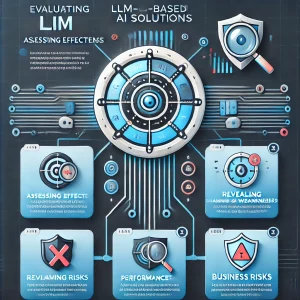

Evaluating Existing LLM-Based Solutions

Our benchmarking services are designed to provide a thorough and accurate assessment of how effectively your AI solutions perform in relation to their intended goals.

We begin by closely examining the specific objectives you aim to achieve with your LLM solution. Through a structured and methodological evaluation of your AI’s behavior, we identify both strengths and weaknesses. This process provides a comprehensive understanding of what aspects of your solution are performing well, which areas require improvement, and what elements may pose significant risks to your business operations.

Our evaluation goes beyond surface-level assessments, offering detailed insights and recommendations tailored to your unique use case. This empowers you to make informed decisions about your AI strategy, ensuring that your solutions are aligned with your business needs and capable of delivering value without compromising operational integrity.

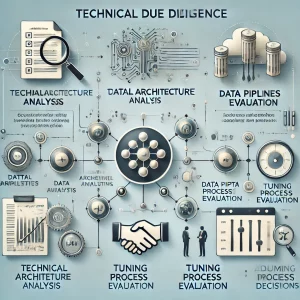

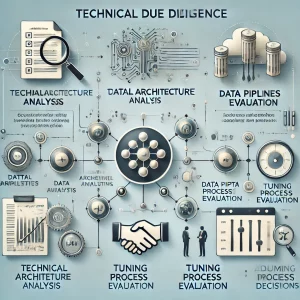

Due Diligence

In addition to assessing the feasibility and reliability of proposed AI solutions, our technical due diligence and benchmarking services provide investors with a strategic advantage in evaluating AI startups. By benchmarking the startup’s technology against industry standards and competitor offerings, we help investors identify unique value propositions, scalability potential, and long-term viability. Our evaluations uncover hidden technical risks, such as unoptimized models, poorly designed data pipelines, or reliance on proprietary datasets, which could impact scalability or compliance. Additionally, we assess the team’s technical expertise, development processes, and adaptability to emerging trends, ensuring that the startup is equipped to thrive in a competitive and rapidly evolving AI landscape. These insights enable investors to not only mitigate risk but also strategically allocate resources to the most promising and impactful AI ventures.

How Does It Work?

Although each benchmark-building project may vary depending on specific needs, our process is structured and well-defined to ensure clarity and efficiency. Here is a detailed overview of how we work:

- Initial Meeting to Understand Your Case

We start with a collaborative discussion to gain a deep understanding of your goals, challenges, and requirements. This session ensures we align our efforts with your specific needs and expectations.

- Internal Analysis and Preparation

Our team conducts a thorough internal review based on the information gathered during the initial meeting. This includes analyzing your current systems, identifying potential gaps, and preparing a plan tailored to your use case.

- Joint Ideation Session

In this interactive session, we bring together key stakeholders from both sides to brainstorm and refine ideas. This collaborative process helps in finalizing the key aspects of the benchmark, ensuring that it aligns with your objectives.

- Benchmark Building

Using the insights and ideas from previous steps, we design and develop a custom benchmark framework. This includes selecting appropriate metrics, datasets, and methodologies to evaluate the performance of AI solutions effectively.

- Evaluation

The benchmarks are applied to your existing or candidate AI solutions to systematically assess their performance. We evaluate against predefined criteria, uncover strengths and weaknesses, and identify potential risks.

- Building the Summary Report

All findings and insights are compiled into a comprehensive summary report. This document includes detailed results, key observations, and actionable recommendations to guide your decision-making process.

- Hand-Over Meeting

Finally, we present the results to your team in a hand-over meeting. This session ensures you have a clear understanding of the outcomes, the insights gained, and the next steps for implementing improvements or making informed selections.

This structured approach allows us to provide a clear and actionable roadmap tailored to your specific needs, ensuring that your AI solutions meet and exceed your expectations.

What Do We Do for You?

We provide a comprehensive benchmarking service for the LLM solution you currently use or plan to implement. Our process involves analyzing the specific challenges your organization faces, gathering and generating the relevant data necessary for testing, rigorously evaluating the AI’s performance, and delivering a detailed report with findings, insights, and actionable conclusions. Our goal is to ensure that your LLM solution aligns with your business needs and performs as expected.

What Is a Benchmark?

A benchmark is a carefully designed combination of datasets, evaluation techniques, and tailored methodologies used to assess the performance of an LLM. Think of it as an exam for AI, where the questions (data) and scoring methods (evaluation) are customized to ensure relevance to your specific use case. Benchmarks are not one-size-fits-all; they are crafted to measure how well an AI system meets the unique challenges of your domain.
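
To make the exam analogy concrete, here is a minimal sketch of a benchmark as a dataset plus a scoring function. Everything in it is an assumption for illustration: the `Example` type, the `exact_match` scorer, the sample questions, and `stub_model`, which stands in for a call to a real LLM. Real benchmarks usually need richer scoring than exact string matching.

```python
from typing import Callable, NamedTuple, Sequence


class Example(NamedTuple):
    prompt: str    # the "exam question"
    expected: str  # the reference answer


def exact_match(prediction: str, expected: str) -> float:
    """Score 1.0 when the normalized strings are equal, else 0.0."""
    return float(prediction.strip().lower() == expected.strip().lower())


def run_benchmark(
    model: Callable[[str], str],
    dataset: Sequence[Example],
    scorer: Callable[[str, str], float] = exact_match,
) -> float:
    """Average the scorer over every example in the dataset."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    total = sum(scorer(model(ex.prompt), ex.expected) for ex in dataset)
    return total / len(dataset)


# Hypothetical domain-specific questions.
dataset = [
    Example("What is the notice period in contract A?", "30 days"),
    Example("Which currency does invoice 17 use?", "EUR"),
]


def stub_model(prompt: str) -> str:
    """Placeholder for a real LLM call; answers only one question."""
    canned = {"What is the notice period in contract A?": "30 Days"}
    return canned.get(prompt, "unknown")


score = run_benchmark(stub_model, dataset)
print(f"benchmark score: {score:.2f}")
```

Swapping the dataset or the scorer changes what the benchmark measures, which is why both are tailored to the use case.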

Why Choose Us Over Existing Tools?

Using a benchmarking tool alone is not enough. Tools provide the mechanisms, but it takes expertise and effort to effectively design, implement, and analyze benchmarks. We bring hands-on experience and ensure the work is done meticulously using the best-suited tools for your requirements. Our approach goes beyond simple tool utilization—we tailor every aspect of the process to deliver insights that matter most to you.

Why Not Public LLM Benchmarks?

Relying solely on public LLM benchmarks is akin to hiring someone based purely on their IQ score. Generic tests cannot provide a full understanding of how well an LLM aligns with your specific goals or challenges.

Furthermore, there is a significant risk that an LLM may have “seen” public benchmarks during its training, which could result in inflated performance metrics that do not accurately reflect real-world effectiveness. This makes public benchmarks less reliable for evaluating fitness to your unique needs.

Our tailored approach eliminates these concerns, providing a true and unbiased evaluation that aligns with your business objectives and operational demands.
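
The contamination risk described above can be probed with a simple heuristic: measure how many word n-grams of a benchmark item also appear in a reference corpus. The sketch below assumes you have some text suspected of overlapping with a model's training data, which is often not the case in practice. The function names and sample strings are hypothetical, and a low overlap does not prove that a model never saw the item.

```python
def ngrams(text: str, n: int) -> set:
    """Return the set of lowercase word n-grams in `text`."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_item: str, corpus_text: str, n: int = 5) -> float:
    """Fraction of the item's n-grams that also occur in `corpus_text`."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0  # item shorter than n words: nothing to compare
    return len(item_grams & ngrams(corpus_text, n)) / len(item_grams)


# Illustrative strings only.
corpus = "the quick brown fox jumps over the lazy dog near the river bank"
seen_item = "quick brown fox jumps over the lazy dog"
fresh_item = "a slow green turtle walks under the busy bridge"

seen_ratio = overlap_ratio(seen_item, corpus)
fresh_ratio = overlap_ratio(fresh_item, corpus)
print(f"seen item overlap:  {seen_ratio:.2f}")
print(f"fresh item overlap: {fresh_ratio:.2f}")
```

Items with high overlap are candidates for replacement with newly written test data.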
